@kas1e

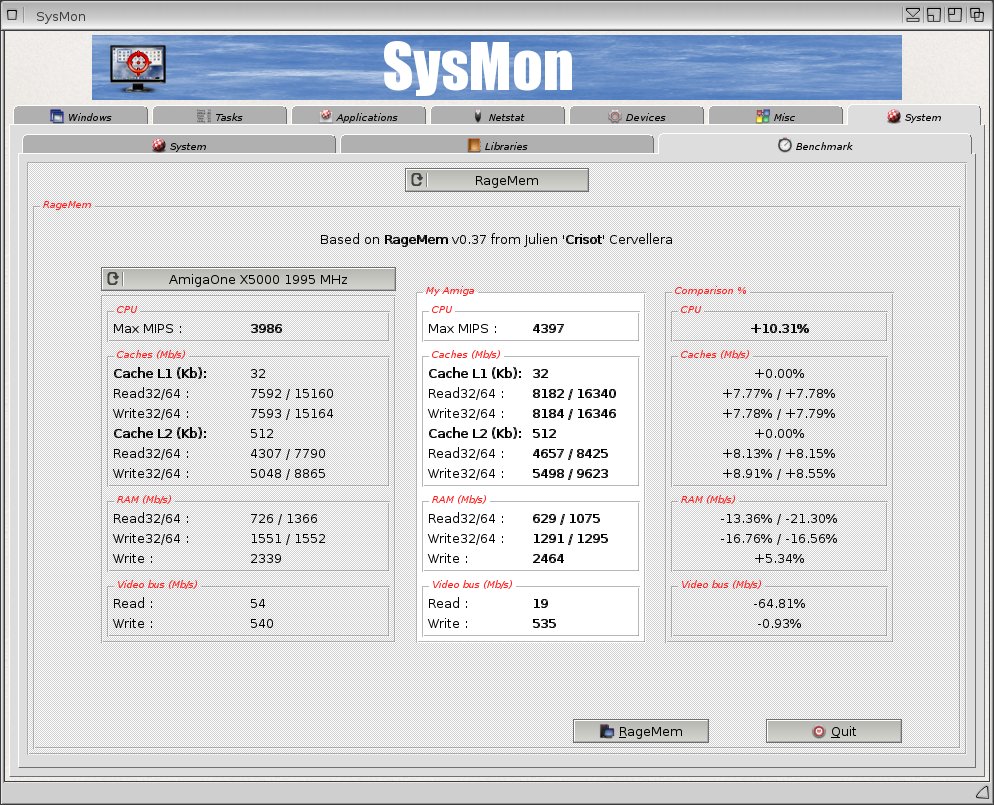

I've dug a bit deeper into the subject and it all makes sense to me now. So here is what I know so far. (But you can also skip directly to the end for the performance values.)

The technology:

The first implementation of dual channel memory on PCs merged two 64bit DDR3 buses into one 128bit bus (as was my understanding of this technology until today). This doubles the bandwidth of the memory bus. The timing of both DIMMs had to be closely matched for the 128bit bus to work properly. But as it turned out, most consumer applications saw little benefit from a 128bit DDR3 memory bus. So, the 128bit bus idea was dropped and replaced with technology that divides (interleaves) the memory accesses over the two channels (e.g., the even accesses on channel 1 and the odd accesses on channel 2). Since both channels operate concurrently, and an internal access has to wait for the (by comparison) slow DDR3 interface anyway, the effective memory bandwidth is doubled again.

The difference with the former 128bit wide dual channel bus is that the bus remains 64bit for consumer applications, but at twice the perceived speed. As a result, a lot of those applications saw a considerable increase in performance. Another advantage is that the DIMMs operate independently of each other, so timing doesn't have to be closely matched anymore. Only the memory layout needs to be the same. It is still preferable that both DIMMs have similar timing, but this is not required. The slowest timing determines the timing for both DIMMs.

Now back to the X5000. The P5020 supports two tricks to increase the effective bandwidth of DDR3 memory accesses. The first trick is controller interleaving and the second one is rank interleaving.

Controller interleaving:

P5020 controller interleaving supports 4 modes (cache-line, page, bank and super-bank). The cache-line interleaving mode is basically the same as modern dual channel technology on PCs. DDR3 memory accesses with the size of a cache line are divided (interleaved) over the two available memory controllers. This effectively doubles the memory bandwidth for an application on the X5000 too. The other three modes are like rank interleaving and are meant to reduce latencies. But cache-line interleaving will provide the most performance benefit by far.
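To make the cache-line mode a bit more concrete, here is a minimal sketch of how consecutive cache lines could be spread over the two controllers. The 64-byte cache-line size and the simple "line number modulo 2" mapping are my assumptions for illustration; the real P5020 address decoding may differ in detail.

```python
CACHE_LINE_BYTES = 64  # assumed cache-line size (illustration only)
NUM_CONTROLLERS = 2    # two memory controllers, as described above


def controller_for(address: int) -> int:
    """Return the index of the controller that would serve this address."""
    return (address // CACHE_LINE_BYTES) % NUM_CONTROLLERS


# A sequential 512-byte access touches 8 cache lines that alternate
# between controller 0 and controller 1.
lines = [controller_for(addr) for addr in range(0, 512, CACHE_LINE_BYTES)]
print(lines)
```

Because neighbouring cache lines land on different controllers, a sequential stream keeps both DDR3 interfaces busy at the same time.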

Rank (chip select) interleaving:

First a bit of background on the internals of DDR3 memory. DDR3 DRAM is organized in multiple two-dimensional arrays of rows and columns called banks. A row (also called a page) in a DDR3 DIMM bank has a total length of 8kbyte (for 8x 8bit DDR3 chips) and must be opened, by means of an activation command, before the individual bytes in that 8kbyte page can be accessed with column addressing. So, the same page in the same bank is opened at once for all eight 8bit DDR3 chips in the same rank. When access (read/write) to the page columns is completed, the complete row/page must be closed with a pre-charge command before you can open the next row/page with a new activation command.

Rank interleaving reorganizes the memory addressing in such a way that the consecutive memory address is not on the next row in the same bank of the rank but on the same row/page of the same bank on the next rank (chip select). One activation command opens the same row in the same bank across all chip selects. In the case of dual rank memory with 2 chip selects and 8x 8bit chips on each chip select, the effective row/page size doubles from 8kbyte to 16kbyte. The benefit of this addressing method is that it reduces the number of row/page activation and closing commands and therefore reduces the average read/write latency. This results in lower overall access time and therefore an increase in effective bandwidth. (Note that the DIMM is still 64bit. So, while the activation and closing commands can be sent simultaneously to both ranks, still only 64 bits of data can be accessed at the same time.)
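The effect of the larger effective page can be sketched with a bit of arithmetic. This is a deliberately simplified model of a purely sequential sweep through a single bank; it ignores the fact that real DIMMs have several banks and that the controller can keep rows open in more than one of them. The function name is just something I made up for the example.

```python
ROW_BYTES_PER_RANK = 8 * 1024  # 8kbyte row per rank (8x 8bit chips), from the text


def row_activations(total_bytes: int, ranks_interleaved: int) -> int:
    """Count activation commands for a sequential sweep over total_bytes."""
    effective_row = ROW_BYTES_PER_RANK * ranks_interleaved
    return -(-total_bytes // effective_row)  # ceiling division


one_mib = 1024 * 1024
print(row_activations(one_mib, 1))  # single rank: 8kbyte effective page
print(row_activations(one_mib, 2))  # dual rank interleaved: 16kbyte effective page
```

In this model the dual rank case needs half as many activate/pre-charge pairs for the same amount of data, which is where the latency saving comes from.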

The impact of the memory controller and DIMM itself on overall memory performance of the X5000 can be ranked from high to low in the following order:

1. Cache line controller interleaving

2. Rank interleaving

3. Faster timing of the DIMM itself. (Lowering latencies by one or two clocks has less impact than omitting commands with latencies of >10 clocks.)

Normally, the clock speed of the DDR3 DIMM would matter as well for the true latency of a DIMM, but the X5000/20 is limited to just 666MHz/1333MT/s.

The DIMMs:

It turns out that the CPU-Z screenshots in my previous post only tell half of the story. In the early days of DDR3 DIMMs, DDR3 chips with double the density were often more than twice as expensive as two DDR3 chips of half the density (for the same annual quantity of DIMMs). So, it was more economical to fit 4GB DIMMs with two ranks of 2GB each. When the price of DDR3 silicon went down, the package price became dominant. So, manufacturers started to fit their DIMMs with a single rank of 4GB because that was now the most economical configuration. Unfortunately, this often happened under the same SKU.

So, the CPU-Z screenshots are not wrong. They simply do not apply to the DIMM of kas1e (and mine) anymore. I verified this by removing the heat spreader on my 4GB Corsair DIMM, and it indeed contains 8x 8bit DDR3 chips (only one side is fitted), so it is a single rank. That is why U-Boot produced the error message on rank interleaving: you cannot interleave ranks with just a single rank available on your DIMM. The DIMM of Skateman is an 8GB DIMM. This DIMM has 8x 8bit DDR3 chips on both sides of the DIMM (16 chips total). These 128 bits in total are divided over two ranks of 64 bits each. That's why rank interleaving works for Skateman.
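The rank count follows directly from the chip count and chip width. A small sketch, assuming a non-ECC DIMM with a 64bit data bus (the helper name is hypothetical):

```python
DIMM_BUS_BITS = 64  # data width of a non-ECC DIMM


def rank_count(chips: int, chip_width_bits: int) -> int:
    """Return how many 64bit ranks a set of DRAM chips forms."""
    total_bits = chips * chip_width_bits
    if total_bits % DIMM_BUS_BITS != 0:
        raise ValueError("chips do not fill a whole number of 64bit ranks")
    return total_bits // DIMM_BUS_BITS


print(rank_count(8, 8))   # one side fitted, like the 4GB DIMM described above
print(rank_count(16, 8))  # both sides fitted, like the 8GB DIMM described above
```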

Since the DIMMs of both kas1e and Skateman run on similar SPD JEDEC timing, we can already notice that rank interleaving results in about 11% higher write speeds in ragemem.

The first post in this thread shows that controller interleaving results in about 65% higher write speeds in ragemem.

The result of faster DIMM timing is a bit trickier to predict because we currently cannot control the latencies like in a PC BIOS/UEFI. U-Boot SPL simply takes the values from the SPD EEPROM on a DIMM (often the JEDEC defined CL9-9-9-24 at 1333MT/s) and applies them to the memory controller. These so-called JEDEC timings are more relaxed than the maximum capability of the DIMM. Fortunately, DIMMs like the Kingston Fury Beast 1866MT/s modules come with more optimized timing values in their SPD EEPROM (CL8-9-8-24 instead of JEDEC CL9-9-9-24 at 1333MT/s). So, the modules are not necessarily faster than equivalent DIMMs from other manufacturers, but U-Boot will configure the P5020 memory controller with the faster timing from the SPD EEPROM.
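For a feeling of what one clock of CAS latency means in absolute time, here is a small sketch. It only uses the standard relation that the DDR clock runs at half the transfer rate; the function name is mine.

```python
def latency_ns(cycles: int, data_rate_mts: float) -> float:
    """Convert a latency in clock cycles to nanoseconds for a DDR data rate."""
    clock_mhz = data_rate_mts / 2   # DDR transfers twice per clock
    return cycles / clock_mhz * 1000


cl9 = latency_ns(9, 1333)
cl8 = latency_ns(8, 1333)
print(f"CL9: {cl9:.1f} ns, CL8: {cl8:.1f} ns, saved: {cl9 - cl8:.1f} ns")
```

So going from CL9 to CL8 at 1333MT/s saves roughly 1.5 ns per column access, which is small compared to skipping an activate/pre-charge pair altogether.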

Test result:

For the sake of Amiga science, I've bought both the Fury Beast single rank (2x4GB) and dual rank (2x8GB) kits.

Memory modules used in this test:

- Corsair Vengeance LP 2x4GB DDR3-1600 Single Rank (CML8GX3M2A1600C9) -> CL9-9-9-24 @ 1333MT/s

- Kingston Fury Beast 2x4GB DDR3-1866 Single Rank (KF318C10BRK2/8) -> CL8-9-8-24 @ 1333MT/s

- Kingston Fury Beast 2x8GB DDR3-1866 Dual Rank (KF318C10BBK2/16) -> CL8-9-8-24 @ 1333MT/s

Here are the ragemem results with their U-Boot initialization output:

Corsair Vengeance LP:

DRAM: Initializing....using SPD

Detected UDIMM CML8GX3M2A1600C9

Detected UDIMM CML8GX3M2A1600C9

Not enough bank(chip-select) for CS0+CS1 on controller 0, interleaving disabled!

Not enough bank(chip-select) for CS0+CS1 on controller 1, interleaving disabled!

6 GiB left unmapped

8 GiB (DDR3, 64-bit, CL=9, ECC off)

DDR Controller Interleaving Mode: cache line

Read32: 665 MB/Sec

Read64: 1195 MB/Sec

Write32: 1429 MB/Sec

Write64: 1433 MB/Sec

Kingston Fury Beast 2x4GB (Single rank):

DRAM: Initializing....using SPD

Detected UDIMM KF1866C10D3/4G

Detected UDIMM KF1866C10D3/4G

Not enough bank(chip-select) for CS0+CS1 on controller 0, interleaving disabled!

Not enough bank(chip-select) for CS0+CS1 on controller 1, interleaving disabled!

6 GiB left unmapped

8 GiB (DDR3, 64-bit, CL=8, ECC off)

DDR Controller Interleaving Mode: cache line

Read32: 682 MB/Sec

Read64: 1225 MB/Sec

Write32: 1479 MB/Sec

Write64: 1483 MB/Sec

Kingston Fury Beast 2x8GB (Dual rank):

DRAM: Initializing....using SPD

Detected UDIMM KF1866C10D3/8G

Detected UDIMM KF1866C10D3/8G

14 GiB left unmapped

16 GiB (DDR3, 64-bit, CL=8, ECC off)

DDR Controller Interleaving Mode: cache line

DDR Chip-Select Interleaving Mode: CS0+CS1

Read32: 685 MB/Sec

Read64: 1261 MB/Sec

Write32: 1638 MB/Sec

Write64: 1644 MB/Sec

| Test (MB/s) | CL9 SR | CL8 SR | CL8 DR |
|---|---|---|---|
| Read32 | 665 (base) | 682 (+2.6%) | 685 (+3.0%) |
| Read64 | 1195 (base) | 1225 (+2.5%) | 1261 (+5.5%) |
| Write32 | 1429 (base) | 1479 (+3.5%) | 1638 (+14.6%) |
| Write64 | 1433 (base) | 1483 (+3.5%) | 1644 (+14.7%) |
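If anyone wants to redo the percentages with their own ragemem numbers, this is a small sketch of the calculation. The numbers are the ragemem values from above; the third printed column (CL8 DR against CL8 SR) isolates the gain from rank interleaving alone.

```python
# ragemem results in MB/s: (CL9 single rank, CL8 single rank, CL8 dual rank)
results = {
    "Read32": (665, 682, 685),
    "Read64": (1195, 1225, 1261),
    "Write32": (1429, 1479, 1638),
    "Write64": (1433, 1483, 1644),
}


def gain_percent(value: float, base: float) -> float:
    """Return the relative gain of value over base in percent."""
    return (value / base - 1) * 100


for name, (cl9_sr, cl8_sr, cl8_dr) in results.items():
    print(
        f"{name:8s}"
        f" CL8 SR vs CL9 SR: {gain_percent(cl8_sr, cl9_sr):+.1f}%"
        f" | CL8 DR vs CL9 SR: {gain_percent(cl8_dr, cl9_sr):+.1f}%"
        f" | CL8 DR vs CL8 SR: {gain_percent(cl8_dr, cl8_sr):+.1f}%"
    )
```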

Conclusion:

As predicted, the controller interleaving mode gives the biggest boost in performance (Write: ~+65%; see post #1). Rank interleaving comes second (Write: ~+10.9%, CL8 dual rank compared to CL8 single rank) and improved timing comes third (CL9 -> CL8; Write: +3.5%). But I am sure that I could have pushed this module further if our U-Boot allowed manual editing of the timings. At CL6 timing we could see a boost in performance similar to that of rank interleaving alone.
